Cogs 300 Unity Labs

A Unity-based set of labs and a machine learning tournament designed for third-year university students in Cognitive Systems 300 at the University of British Columbia

See Code

The Project

Project Overview:

The ongoing project, a collaboration between the Cogs 300 course and the UBC Emerging Media Lab, aims to improve the learning experience and outcomes of the course's robotics labs. My role in the project was Unity developer, pedagogical advisor, and subject matter expert. Working with undergraduate students, expert advisors from industry, and the course professor, we set out to redesign labs 1 to 6 and to prepare the robot tournament in labs 7 to 9.

Lab 2 - Physical Symbol Systems

Learning Goals:

- Students should understand how information can be explicitly represented symbolically

- Appreciate how pre-planned movements and instructions can be too inflexible, or can run into edge cases, when used to solve different types of problems

In the previous version of this lab, students would give a list of instructions to a robot arm, which would then rotate in 3D to hit a series of balls. This task was not ideal: students were often confused by the 3D rotations, which were not part of the learning goals.

In the new version, students have 2 tasks:

- Provide a hard-coded list of turning instructions to a virtual car

- Create an algorithm that decides whether to turn left or right based on distance sensor inputs
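The contrast between the two tasks can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the lab's Unity code: the instruction names, the hypothetical `choose_turn` function, its `clearance` threshold, and the three-sensor layout are all invented for the example. The fixed plan is an explicit symbolic representation of a route, while the rule reacts to whatever the sensors report.

```python
from typing import List

# Task 1 (hypothetical encoding): a fixed, symbolic plan. The car executes these
# instructions in order, whatever its surroundings turn out to be.
HARD_CODED_PLAN: List[str] = ["forward", "forward", "left", "forward", "right", "forward"]


def choose_turn(left_dist: float, front_dist: float, right_dist: float,
                clearance: float = 1.0) -> str:
    """Task 2 (hypothetical rule): react to distance-sensor readings.

    Keep driving while the path ahead is clear; otherwise turn toward the
    side with more free space. Distances are in arbitrary units.
    """
    if front_dist > clearance:
        return "forward"
    return "left" if left_dist > right_dist else "right"
```

The plan only works for the layout it was written for, whereas `choose_turn` can be reused in a new maze. Ties between the side distances default to turning right, an arbitrary choice that is exactly the kind of edge case the learning goals mention.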

This new version better supports the learning goals, as it removes confusing extraneous information (3D rotations). It also provides scaffolding for labs 4 and 6 and for the robot tournament, which use the same or similar vehicles and movements.

Lab 3 - Emergence

Learning Goals:

- Students should understand how simple rules can combine to create behaviour that is more than the sum of its parts

- Understand how slight variations in the rules can have dramatic consequences for the form of the final system

In the previous version of this lab, students would code an algorithm that produced the synchronized flashing of several fireflies. However, the underlying code that students built on was extremely slow and could only support 3 to 6 fireflies in total before the frame rate dropped sharply.
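Firefly synchronization is often modelled as a set of pulse-coupled oscillators. The following is a minimal sketch of that idea, not the lab's algorithm: the multiplicative phase nudge, the all-to-all coupling, and the parameters `dt` and `alpha` are assumptions chosen for illustration. Each firefly's phase rises steadily and it flashes at 1.0; every flash pulls the other fireflies' phases forward, and a firefly pushed past the threshold flashes at once and restarts in step with the flasher. Applying each round of flashes to all fireflies at once, rather than having every firefly check every other one, is one possible way to keep the per-frame cost down; it is not necessarily the redesign the lab used.

```python
from typing import List


def simulate_fireflies(phases: List[float], steps: int,
                       dt: float = 0.01, alpha: float = 0.1) -> List[float]:
    """Advance all-to-all pulse-coupled fireflies and return their final phases.

    Hypothetical model: each phase rises by `dt` per step and the firefly
    flashes on reaching 1.0. Each flash multiplies the phase of every firefly
    that has not flashed yet this step by (1 + alpha); any firefly pushed to
    1.0 flashes in the same step (a cascade). Flashers then reset to 0.0.
    """
    phases = list(phases)
    n = len(phases)
    for _ in range(steps):
        phases = [p + dt for p in phases]
        fired = [False] * n
        new = [i for i in range(n) if phases[i] >= 1.0]
        while new:
            for i in new:
                fired[i] = True
            # Apply all flashes from this round at once: O(n) work per round,
            # instead of every firefly checking every other firefly.
            boost = (1.0 + alpha) ** len(new)
            for i in range(n):
                if not fired[i]:
                    phases[i] *= boost
            new = [i for i in range(n) if not fired[i] and phases[i] >= 1.0]
        # Fireflies that flashed together restart together, so they stay in sync.
        phases = [0.0 if fired[i] else phases[i] for i in range(n)]
    return phases
```

With `alpha=0.1`, two fireflies started at phases 0.9 and 0.0 end up flashing together within a few cycles, while `alpha=0.0` leaves them permanently out of step: a small example of how a slight change to one rule changes the behaviour of the whole system.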

In the new version, we completely redesigned the underlying algorithm to be more efficient and added flocking behaviour to the fireflies.
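Flocking is commonly modelled with Craig Reynolds' three "boids" rules: separation (avoid crowding neighbours), alignment (match their heading), and cohesion (move toward their centre). The following is a hypothetical 2D sketch of those rules; the weights, neighbour radius, and speed cap are illustrative assumptions, and the lab's own flocking code may be organised differently.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def step_flock(boids: List[Boid], radius: float = 2.0, w_sep: float = 0.05,
               w_ali: float = 0.05, w_coh: float = 0.01,
               max_speed: float = 1.0, dt: float = 1.0) -> List[Boid]:
    """Return the flock after one update of the three boids rules."""
    updated = []
    for b in boids:
        neighbours = [o for o in boids
                      if o is not b and math.hypot(o.x - b.x, o.y - b.y) < radius]
        ax = ay = 0.0
        if neighbours:
            k = len(neighbours)
            # Cohesion: steer toward the neighbours' centre of mass.
            ax += w_coh * (sum(o.x for o in neighbours) / k - b.x)
            ay += w_coh * (sum(o.y for o in neighbours) / k - b.y)
            # Alignment: steer toward the neighbours' average velocity.
            ax += w_ali * (sum(o.vx for o in neighbours) / k - b.vx)
            ay += w_ali * (sum(o.vy for o in neighbours) / k - b.vy)
            # Separation: push away from each neighbour, harder when closer.
            for o in neighbours:
                dx, dy = b.x - o.x, b.y - o.y
                d2 = dx * dx + dy * dy
                if d2 > 0:
                    ax += w_sep * dx / d2
                    ay += w_sep * dy / d2
        vx, vy = b.vx + ax * dt, b.vy + ay * dt
        speed = math.hypot(vx, vy)
        if speed > max_speed:  # cap the speed so the flock stays stable
            vx, vy = vx * max_speed / speed, vy * max_speed / speed
        updated.append(Boid(b.x + vx * dt, b.y + vy * dt, vx, vy))
    return updated
```

No rule mentions a "flock"; the group motion emerges from each boid reacting only to nearby boids. The neighbour search here compares every pair, which is O(n²) per step; spatial partitioning such as a uniform grid is a common way to reduce that cost for larger flocks.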

This new version better supports the learning goals, as students can see how behaviour emerges at scale. The flocking behaviour also provides an additional, interactive example of emergence.

Lab 4 - Simple Neural Networks

Learning Goals:

- Students should understand how a perceptron behaves as a simple, single-layer neural network

- Understand how labeled data is used to train a neural network

- Understand the idea of linear separability
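Linear separability has a compact standard definition. The block states it for a perceptron whose outputs are labelled -1 and +1 (a labelling convention chosen here for illustration) and shows why XOR is the classic dataset a single perceptron cannot learn.

```latex
% Perceptron decision rule
\hat{y} = \operatorname{sign}(\mathbf{w} \cdot \mathbf{x} + b)

% A labelled set is linearly separable iff one hyperplane splits it
\{(\mathbf{x}_i, y_i)\},\; y_i \in \{-1, +1\} \text{ is linearly separable}
  \iff \exists\, \mathbf{w}, b :\; y_i(\mathbf{w} \cdot \mathbf{x}_i + b) > 0 \;\; \forall i

% XOR is not: its four points would require
\begin{aligned}
(0,0) \mapsto -1 &: \quad b < 0 \\
(1,1) \mapsto -1 &: \quad w_1 + w_2 + b < 0 \\
(0,1) \mapsto +1 &: \quad w_2 + b > 0 \\
(1,0) \mapsto +1 &: \quad w_1 + b > 0
\end{aligned}
% Adding the last two lines gives w_1 + w_2 + 2b > 0, so w_1 + w_2 + b > -b > 0,
% which contradicts the second line.
```

By contrast, a hypothetical driving rule such as "turn left when the left sensor reads a larger distance than the right" is linearly separable in sensor space (weights (1, -1), bias 0), so a single perceptron can represent it.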

In the previous version of this lab, students would train a perceptron to eat or avoid fruit depending on attributes such as color or whether it was rotten. This version of the lab was not very transparent or intuitive for students.

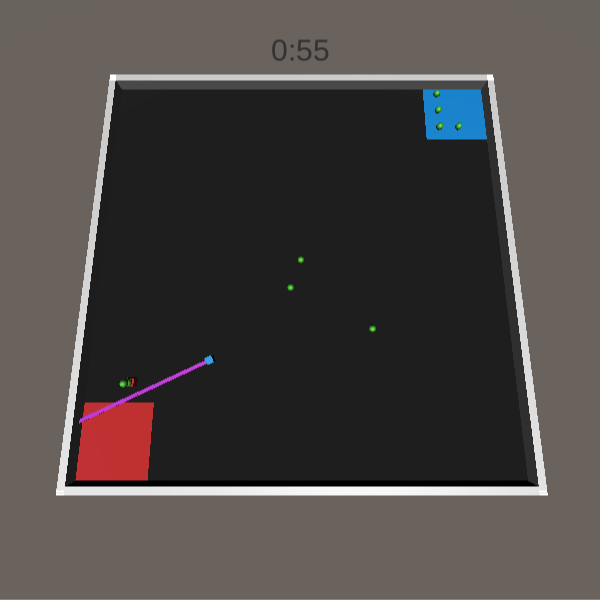

In the new version, students get a car to navigate a maze by training a perceptron to associate distance sensor readings with the outputs of turning left or right. Students code part of the algorithm that learns from labeled data and adjusts the perceptron's weights. Afterwards, they use the arrow keys to provide the labeled training data to the perceptron.
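The training step can be sketched with the classic perceptron learning rule, which adjusts the weights only when the perceptron gets a labeled example wrong. This is a minimal sketch under stated assumptions, not the lab's implementation: the two-sensor input `(left_distance, right_distance)`, the +1/-1 labels standing in for left/right arrow-key presses, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical encoding: each sample pairs (left_distance, right_distance)
# with a label recorded from the arrow keys: +1 = turn left, -1 = turn right.
Sample = Tuple[Sequence[float], int]


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Single-layer perceptron: the sign of the weighted sum decides the turn."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else -1


def train_perceptron(samples: List[Sample], n_inputs: int,
                     lr: float = 0.1, epochs: int = 20) -> Tuple[List[float], float]:
    """Classic perceptron learning rule: update only on mistakes."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in samples:
            if predict(weights, bias, x) != y:
                # Shift the decision boundary toward the misclassified sample.
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
                mistakes += 1
        if mistakes == 0:  # every training sample is now classified correctly
            break
    return weights, bias


# Example data: the operator turned toward whichever side had more free space.
samples: List[Sample] = [([3.0, 1.0], 1), ([2.5, 0.5], 1),
                         ([0.8, 2.0], -1), ([1.0, 3.5], -1)]
weights, bias = train_perceptron(samples, n_inputs=2)
```

On this small, linearly separable set the rule converges in its second pass, and the learned weights then generalize to unseen readings, e.g. `predict(weights, bias, [4.0, 0.5])` returns `1` (turn left).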

This new version better supports the learning goals, as both the task and the way the perceptron is trained become much clearer. It also builds on the scaffolding from lab 2 and prepares students for lab 6, allowing them to compare and contrast different approaches to the same problem.

Lab 6 - Embodied Cognition

Learning Goals:

- Students should understand the connection between the form (body) of an agent and its function (cognition)

In the previous version of this lab, students would solve a maze using different movement types (sliding vs jumping). However, this was difficult to connect to cognition specifically.

In the new version, students simulate the behaviour described in a paper read in class, in which robots "tidy" an arena of cubes by pushing them into piles.
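One way such tidying can arise from a very simple controller can be sketched as follows. This is a minimal sketch under stated assumptions, not the lab's code or the paper's exact setup: it assumes each simulated robot has one distance sensor angled to each front corner and none pointing straight ahead, and the sensor angles, range, and function names are hypothetical. Because a cube directly in front is invisible to the robot, it keeps driving and pushes that cube; it only turns when a side sensor detects something, such as another cube beside the one it is pushing, which tends to leave cubes together.

```python
import math
from typing import Iterable, Tuple

# Hypothetical sensor layout: one sensor on each front corner. A sensor fires
# when a cube lies within SENSOR_RANGE and its bearing (degrees relative to the
# heading; positive = left) falls inside the sensor's angular band.
SENSOR_MIN_DEG = 20.0
SENSOR_MAX_DEG = 70.0
SENSOR_RANGE = 1.5


def sense(robot_xy: Tuple[float, float], heading_deg: float,
          cubes: Iterable[Tuple[float, float]]) -> Tuple[bool, bool]:
    """Return (left_hit, right_hit). Cubes straight ahead are NOT detected."""
    rx, ry = robot_xy
    left_hit = right_hit = False
    for cx, cy in cubes:
        dx, dy = cx - rx, cy - ry
        if math.hypot(dx, dy) > SENSOR_RANGE:
            continue
        bearing = math.degrees(math.atan2(dy, dx)) - heading_deg
        bearing = (bearing + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
        if SENSOR_MIN_DEG <= bearing <= SENSOR_MAX_DEG:
            left_hit = True
        elif -SENSOR_MAX_DEG <= bearing <= -SENSOR_MIN_DEG:
            right_hit = True
    return left_hit, right_hit


def controller(left_hit: bool, right_hit: bool) -> str:
    """Pure obstacle avoidance: turn away from whatever a side sensor sees."""
    if left_hit:  # left takes priority if both sensors fire
        return "turn_right"
    if right_hit:
        return "turn_left"
    return "forward"  # nothing sensed: keep driving, pushing any cube ahead
```

The controller contains no "tidy up" instruction at all; whether a cube is pushed or avoided depends on where the sensors sit on the robot's body, which is the form-function link the learning goal describes.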

This new version better supports the learning goals, as it connects directly to content covered in the course lectures and readings, and it more accurately simulates embodied cognition.

Reflections

Working on this project has been both engaging and educational, and I've thoroughly enjoyed the opportunity to continue work on a project I started during my undergraduate degree. I hope work on this project continues well into the future.